Ariel University

Course name: Deep learning and natural language processing

Course number: 7061510-1

Lecturer: Dr. Amos Azaria

Edited by: Moshe Hanukoglu

Date: First Semester 2018-2019

Based on presentations by Dr. Amos Azaria

Natural Language Toolkit (NLTK)

For a computer to work with what we write, we first need to simplify the text and analyze its structure.

One library that helps a great deal in this area is NLTK.

First, install NLTK by running the following command in the terminal: pip install nltk

Before running the code, run the following two commands. The second one, nltk.download('all'), downloads the corpora and models that NLTK uses; you only need to run it the first time you use this notebook.

import nltk

nltk.download('all')

There are several ways to split a sentence into words.

The simplest is to split it on spaces.

my_text = "Where is St. Paul located? I don't seem to find it. It isn't in my map."

my_text.split(" ") # or my_text.split()

However, this method fails in many cases.

For example, in "Dad went home." the period at the end belongs to the sentence, not to the last word, yet splitting on spaces leaves it attached and produces the token "home.". Contractions such as "don't" are not split either.

Instead, we use NLTK's tokenizers, which split the text into sentences and into words far more reliably.

Tokenize

from nltk.tokenize import word_tokenize, sent_tokenize

sent_tokenize(my_text)

word_tokenize(my_text)
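To see concretely why splitting on spaces is not enough, the sketch below compares str.split with NLTK's TreebankWordTokenizer. We use the Treebank tokenizer here (a choice made for this demo) because, unlike word_tokenize, it needs no downloaded data; word_tokenize first splits the text into sentences and then applies a Treebank-style word tokenizer to each one, so on single sentences like these the results are typically similar.

```python
from nltk.tokenize import TreebankWordTokenizer

tokenizer = TreebankWordTokenizer()

# Each string is a single sentence: the Treebank tokenizer only splits off a
# period at the very end of its input, which is why word_tokenize first
# splits the text into sentences.
sentences = ["Dad went home.", "I don't seem to find it."]

for s in sentences:
    print("split:   ", s.split())             # punctuation stays glued to words
    print("treebank:", tokenizer.tokenize(s))  # punctuation and "n't" split off
```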

Next, we want to reduce every word to its base form. For example, walking, walked, and walks are all forms of walk. A stemmer does this by stripping suffixes from the word.

Stemmer

from nltk.stem import PorterStemmer

from nltk.tokenize import word_tokenize

ps = PorterStemmer()

my_text = "Whoever eats many cookies is regretting doing so"

stemmed_sentence = []

for word in word_tokenize(my_text):
    stemmed_sentence.append(ps.stem(word))

stemmed_sentence
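The sketch below illustrates the walk example: several inflected forms collapse to the same stem. It also shows a typical weakness of stemming, which simply strips suffixes and may therefore produce a stem that is not a real word.

```python
from nltk.stem import PorterStemmer

ps = PorterStemmer()

# Different inflected forms of the same verb collapse to one stem
forms = ["walk", "walks", "walked", "walking"]
stems = [ps.stem(w) for w in forms]
print(stems)

# A stem is not always a dictionary word: the suffix is just chopped off
print(ps.stem("cookies"))
```

The second result is the motivation for lemmatization, which returns real dictionary forms instead.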

After splitting the text into words and finding the "root" of each word, we would like to know the syntactic role (part of speech) of each word in the sentence.

Part of Speech Tagging (POS)

my_tokenized_text = word_tokenize(my_text)

nltk.pos_tag(my_tokenized_text)

The following command lists all the part-of-speech tags (the Penn Treebank tag set) together with their descriptions.

nltk.help.upenn_tagset()

Now that we can obtain the syntactic role of each word, we can use it to find the root of each word more accurately.

This method, lemmatization, maps each word to its dictionary form (its lemma). It is usually more accurate than stemming, but more complex.

Lemmatization

The WordNetLemmatizer in NLTK uses a shorter list of part-of-speech tags than the Penn Treebank, so we use a short function to convert between the two.

from nltk.corpus import wordnet as wn

def is_noun(tag):
    return tag in ['NN', 'NNS', 'NNP', 'NNPS']

def is_verb(tag):
    return tag in ['VB', 'VBD', 'VBG', 'VBN', 'VBP', 'VBZ']

def is_adverb(tag):
    return tag in ['RB', 'RBR', 'RBS']

def is_adjective(tag):
    return tag in ['JJ', 'JJR', 'JJS']

def penn2wn(tag):
    # Convert a Penn Treebank tag to a WordNet POS; default to noun
    if is_adjective(tag):
        return wn.ADJ
    elif is_noun(tag):
        return wn.NOUN
    elif is_adverb(tag):
        return wn.ADV
    elif is_verb(tag):
        return wn.VERB
    return wn.NOUN
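The four helper functions can be folded into a single lookup table, because every relevant Penn Treebank tag starts with one of four two-letter prefixes (JJ, NN, RB, VB). The sketch below is an alternative we suggest, not part of the original notes; the name penn2wn_compact is hypothetical. It uses the plain string codes that WordNet's NOUN, VERB, ADJ and ADV constants stand for, so it runs without downloading WordNet, and for the standard Penn tags it gives the same result as penn2wn.

```python
# WordNet POS codes as plain strings: these are the values of
# nltk.corpus.wordnet's NOUN, VERB, ADJ and ADV constants.
WN_NOUN, WN_VERB, WN_ADJ, WN_ADV = "n", "v", "a", "r"

# Map the first two letters of a Penn Treebank tag to a WordNet POS.
# Checking two letters (not one) keeps e.g. RP (particle) out of the adverbs.
PENN_PREFIX_TO_WN = {"JJ": WN_ADJ, "NN": WN_NOUN, "RB": WN_ADV, "VB": WN_VERB}

def penn2wn_compact(tag):
    """Same mapping as penn2wn; any other tag defaults to noun."""
    return PENN_PREFIX_TO_WN.get(tag[:2], WN_NOUN)

print([penn2wn_compact(t) for t in ["NN", "VBZ", "JJR", "RBS", "DT"]])
```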

from nltk.tokenize import word_tokenize

from nltk import pos_tag

from nltk.stem.wordnet import WordNetLemmatizer

lzr = WordNetLemmatizer()

my_text = "Whoever eats many cookies is regretting doing so"

lemed = []

for (word, pos) in pos_tag(word_tokenize(my_text)):
    lemed.append(lzr.lemmatize(word, penn2wn(pos)))

lemed

After splitting the sentence into words and analyzing them, we now want to find short groups of words within the sentence (chunks); this process is called chunking. Chunks let us treat a word not only on its own but as part of a phrase, so that we can better understand its context.

To find such groups of words, we write regular expressions over part-of-speech tags that describe the structure we are looking for.

For example: "{<DT>?<JJ>*<NN>}"

This expression matches a determiner (DT) appearing at most once, followed by any number of adjectives (JJ), followed by exactly one noun (NN).

To learn more about regular-expression syntax, see the link.

Chunking

my_text = "Dogs or small cats saw Sara, John, Tom, the pretty girl and the big bat"

tagged = nltk.pos_tag(nltk.tokenize.word_tokenize(my_text))

grammar = """NP: {<DT>?<JJ>*<NN.?>}

NounList: {(<NP><,>?)+<CC><NP>}"""

cp = nltk.RegexpParser(grammar)

result = cp.parse(tagged)

print(result)

To view the chunk tree graphically:

result.draw()
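To see a rule like {<DT>?<JJ>*<NN.?>} in isolation, the sketch below applies it to a short word list whose tags we supplied by hand (an assumption of the demo, so that no tagger model has to be downloaded). Note that <NN.?> matches NN followed by at most one more character, so it covers NN, NNS and NNP but not NNPS.

```python
import nltk

# Hand-tagged input: (word, Penn tag) pairs, so no tagger model is needed
tagged = [("the", "DT"), ("big", "JJ"), ("black", "JJ"), ("bat", "NN"),
          ("saw", "VBD"), ("cats", "NNS")]

# Optional determiner, any number of adjectives, then one noun (NN, NNS, NNP)
cp = nltk.RegexpParser("NP: {<DT>?<JJ>*<NN.?>}")
tree = cp.parse(tagged)

# Collect the words of every NP chunk found in the tree
chunks = [[word for word, tag in st.leaves()]
          for st in tree.subtrees(lambda t: t.label() == "NP")]
print(chunks)
```

The verb "saw" is left outside any chunk, and "cats" forms an NP on its own because the determiner and adjectives are optional.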

In some cases the methods we have learned so far are not enough to analyze a sentence, because it contains names of people, places, organizations, and so on, which are meaningful only when the whole phrase is considered together (e.g., "United States") rather than each word separately.

For such expressions we use NLTK's named entity recognizer, ne_chunk, which labels these phrases with their entity type:

Named Entity Recognition (NER)

result = nltk.ne_chunk(nltk.pos_tag(nltk.word_tokenize("Bill Clinton is the president of the United States")))

print (result)

result.draw()

N-Grams

Before we go further, let us look at the N-gram function.

This function creates all groups of N consecutive words (tokens).

These sequences let us look at each word together with its neighbors, i.e., in context, rather than at the word in isolation.

text = "It is a simple text this, this is a simple text, is it simple?"

list(nltk.ngrams(nltk.word_tokenize(text),3))
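Conceptually, an N-gram is a window of N tokens that slides one position at a time. The pure-Python sketch below (our own helper, not NLTK's implementation) produces the same tuples that nltk.ngrams yields for a list of tokens.

```python
def ngrams(tokens, n):
    """Return all runs of n consecutive tokens as tuples (a sliding window)."""
    # zip stops at the shortest shifted copy, giving len(tokens) - n + 1
    # tuples (none at all if the text is shorter than n)
    return list(zip(*(tokens[i:] for i in range(n))))

tokens = ["It", "is", "a", "simple", "text"]
print(ngrams(tokens, 3))
```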

Creating a Random Story with N-Grams

import urllib.request

from random import randint

paragraph_len = 100

all_text = urllib.request.urlopen("https://s3.amazonaws.com/text-datasets/nietzsche.txt").read().decode("utf-8")  # in Python 2, use urllib.urlopen instead

tokens = nltk.word_tokenize(all_text)

my_grams = list(nltk.ngrams(tokens,3))

Next, we generate a random story from the trigrams of the text.

We apply the 3-gram function to the text (my_grams above).

We start the story with two given words. We then scan all the trigrams created by the 3-gram function, find those whose first two words match the last two words of the story (ignoring case), and add the third word of each such trigram to a list of options.

From this list we select the next word at random. A word that follows the pair more often appears more times in the list, so it is more likely to be chosen.

We then repeat the same steps, now using the newly added word and the word before it as the two words we search for among the trigrams.

We continue this way as many times as we want (here, paragraph_len times). If no trigram matches, no word is added, and since the last two words then stay the same, the story stops growing.

sentence = ["It", "is"]

for i in range(paragraph_len):
    options = []
    for trig in my_grams:
        # Keep trigrams whose first two words match the last two words of the story
        if trig[0].lower() == sentence[-2].lower() and trig[1].lower() == sentence[-1].lower():
            options.append(trig[2])
    if len(options) > 0:
        # Pick one of the candidate next words at random
        sentence.append(options[randint(0, len(options) - 1)])

print(" ".join(sentence))
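The loop above scans every trigram in the corpus for each new word, which is slow for a large text. A common alternative, sketched below with our own hypothetical helper names (build_index, generate), indexes the trigrams once in a dictionary keyed by the first two words, so each step is a single lookup. It keeps the same behaviour: case-insensitive matching and a random choice among all continuations, weighted by how often each one occurs. A toy token list stands in for the Nietzsche text.

```python
import random
from collections import defaultdict

def build_index(tokens):
    """Map each (lowercased) pair of words to every word that follows it."""
    index = defaultdict(list)
    for w1, w2, w3 in zip(tokens, tokens[1:], tokens[2:]):
        index[(w1.lower(), w2.lower())].append(w3)
    return index

def generate(index, start, length, seed=None):
    """Extend `start` by up to `length` words drawn from the trigram index."""
    rng = random.Random(seed)
    story = list(start)
    for _ in range(length):
        options = index.get((story[-2].lower(), story[-1].lower()))
        if not options:              # no trigram continues this pair: stop early
            break
        story.append(rng.choice(options))  # repeated words are likelier picks
    return story

tokens = "it is a dog . it is a cat . a cat is a pet .".split()
index = build_index(tokens)
print(" ".join(generate(index, ["It", "is"], 10, seed=0)))
```

Breaking out of the loop on a dead end gives the same final story as the original loop, which simply keeps failing to add words.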

Context-Free Grammar (CFG)

As you learned in the Automata and Formal Languages course, a CFG is composed of four components:

T: terminal vocabulary (the words of the language being defined)

N: non-terminal vocabulary

P: a set of productions of the form a -> b, where a is a non-terminal and b is a sequence of one or more symbols from T ∪ N

S: the start symbol (member of N)

To read more about context-free grammars, see the following link.

As with the previous methods, we want to find the structure of the sentence, which makes it easier to analyze the sentence and understand the role of each of its parts.

CFG Parser

Just as chunking finds structure with regular expressions, we can find the structure of a sentence with a context-free grammar.

We write down our grammar, and the parser finds how the sentence is constructed according to that grammar.

grammar1 = nltk.CFG.fromstring("""

S -> NP VP

VP -> V NP | V NP PP

PP -> P NP

V -> "saw" | "ate" | "walked"

NP -> "John" | "Mary" | "Bob" | Det N | Det N PP

Det -> "a" | "an" | "the" | "my"

N -> "man" | "dog" | "cat" | "telescope" | "park"

P -> "in" | "on" | "by" | "with" """)

sentence = nltk.word_tokenize("Mary saw Bob")

rd_parser = nltk.RecursiveDescentParser(grammar1)

print(list(rd_parser.parse(sentence))[0])

print(list(rd_parser.parse("Mary saw a dog with my telescope".split()))[0])

(list(rd_parser.parse("Mary saw a dog with my telescope".split()))[0]).draw()
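The code above prints only the first parse ([0]). In fact, "Mary saw a dog with my telescope" has two parses under grammar1, which the sketch below shows by counting them. The grammar is repeated so that the snippet runs on its own.

```python
import nltk

# Same productions as grammar1
grammar = nltk.CFG.fromstring("""
S -> NP VP
VP -> V NP | V NP PP
PP -> P NP
V -> "saw" | "ate" | "walked"
NP -> "John" | "Mary" | "Bob" | Det N | Det N PP
Det -> "a" | "an" | "the" | "my"
N -> "man" | "dog" | "cat" | "telescope" | "park"
P -> "in" | "on" | "by" | "with"
""")

parser = nltk.RecursiveDescentParser(grammar)
trees = list(parser.parse("Mary saw a dog with my telescope".split()))

# "with my telescope" attaches either to the dog (NP -> Det N PP)
# or to the act of seeing (VP -> V NP PP)
print(len(trees))
for t in trees:
    print(t)
```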

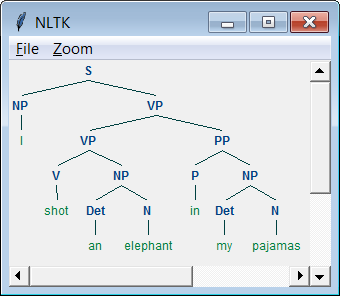

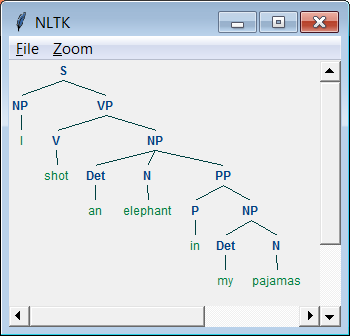

A drawback of grammars is that the same sentence can sometimes be built in more than one way, i.e., the sentence is ambiguous. We will now show one such case.

The following sentence is ambiguous: "I shot an elephant in my pajamas" (who was wearing the pajamas?).


groucho_grammar = nltk.CFG.fromstring("""

S -> NP VP

PP -> P NP

NP -> Det N | Det N PP | 'I'

VP -> V NP | VP PP

Det -> 'an' | 'my'

N -> 'elephant' | 'pajamas'

V -> 'shot'

P -> 'in'

""")

sent = ['I', 'shot', 'an', 'elephant', 'in', 'my', 'pajamas']

parser = nltk.ChartParser(groucho_grammar)

for tree in parser.parse(sent):
    print(tree)
    tree.draw()

CYK Algorithm

- The CYK algorithm uses dynamic programming to find a parse tree (a constituency parse). It requires the grammar to be in Chomsky normal form, where every production has the form A -> B C or A -> a.

- The complexity of CYK is $O(n^3 |G|)$, where n is the length of the sentence and |G| is the size of the grammar.

- We will go over the details in the appendix classes.
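The sketch below illustrates the CYK dynamic program on the Groucho example. Because CYK needs a grammar in Chomsky normal form, we converted groucho_grammar by hand (our own conversion, an assumption of this sketch): NP -> Det N PP is split into NP -> Det Nbar and Nbar -> N PP, where Nbar is a new non-terminal. Instead of building trees, each table cell counts the ways a non-terminal can derive a span, so the top cell gives the number of parse trees.

```python
from collections import defaultdict

# Hand-converted CNF version of groucho_grammar (our own conversion):
# NP -> Det N PP becomes NP -> Det Nbar and Nbar -> N PP.
LEXICAL = {  # word -> non-terminals that produce it directly (A -> a)
    "I": {"NP"}, "shot": {"V"}, "an": {"Det"}, "my": {"Det"},
    "elephant": {"N"}, "pajamas": {"N"}, "in": {"P"},
}
BINARY = [  # (A, B, C) for every rule A -> B C
    ("S", "NP", "VP"), ("PP", "P", "NP"),
    ("NP", "Det", "N"), ("NP", "Det", "Nbar"), ("Nbar", "N", "PP"),
    ("VP", "V", "NP"), ("VP", "VP", "PP"),
]

def cyk_count(words, start="S"):
    """Count the parse trees of `words` rooted at `start` (0 = no parse)."""
    n = len(words)
    # table[i][j][A] = number of ways non-terminal A derives words[i:j]
    table = [[defaultdict(int) for _ in range(n + 1)] for _ in range(n + 1)]
    for i, word in enumerate(words):                 # spans of length 1
        for tag in LEXICAL.get(word, ()):
            table[i][i + 1][tag] += 1
    for length in range(2, n + 1):                   # longer spans, shortest first
        for i in range(n - length + 1):
            j = i + length
            for k in range(i + 1, j):                # split words[i:j] at k
                for a, b, c in BINARY:
                    table[i][j][a] += table[i][k][b] * table[k][j][c]
    return table[0][n][start]

sentence = "I shot an elephant in my pajamas".split()
print(cyk_count(sentence))
```

The three nested loops over span length, start position and split point, together with the loop over the rules, give the $O(n^3 |G|)$ running time.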

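PCFG Parser

A probabilistic context-free grammar (PCFG) is built like a CFG, but each production is assigned a probability, and the probabilities of all productions with the same left-hand side sum to 1. The probability of a parse tree is the product of the probabilities of the productions it uses, so we can compute how likely a given structure of the sentence is under the grammar.

Now, even if there are two ways to build the same sentence, we can choose the more likely one, i.e., the parse with the higher probability. NLTK's ViterbiParser returns the most probable parse.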

grammar = nltk.PCFG.fromstring("""

S -> NP VP [1.0]

VP -> TV NP [0.4]

VP -> IV [0.3]

VP -> DatV NP NP [0.3]

TV -> 'saw' [1.0]

IV -> 'ate' [1.0]

DatV -> 'gave' [1.0]

NP -> 'telescopes' [0.8]

NP -> 'Jack' [0.2] """)

viterbi_parser = nltk.ViterbiParser(grammar)

for tree in viterbi_parser.parse(['Jack', 'saw', 'telescopes']):

print(tree)
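As a worked check of how a parse probability is computed, the sketch below multiplies the probabilities of the productions used in the only parse of "Jack saw telescopes" under the PCFG above. The result, 0.064, should match the p=... value that ViterbiParser prints next to the tree.

```python
import math

# Productions in the (only) parse of "Jack saw telescopes",
# with probabilities copied from the PCFG above
rules_used = [
    ("S -> NP VP", 1.0),
    ("NP -> 'Jack'", 0.2),
    ("VP -> TV NP", 0.4),
    ("TV -> 'saw'", 1.0),
    ("NP -> 'telescopes'", 0.8),
]

# Probability of a parse tree = product of the probabilities of its productions
p = math.prod(prob for _, prob in rules_used)
print(round(p, 4))
```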

CoreNLP

CoreNLP, Stanford's NLP toolkit, is more powerful than NLTK in several respects and includes the following features, which we examine next:

- A large built-in grammar

- Dependency Parsing

- Coreference Resolution

A large built-in grammar

CoreNLP comes with large built-in grammars. We can load other grammars into our software, but we are also free to keep the defaults provided in its directory.

Dependency Parsing

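Dependency parsing finds, for each word in the sentence, the word it depends on (its head) and the grammatical relation between them. Each triple returned below has the form ((head, head_tag), relation, (dependent, dependent_tag)).

Before you run this code, read this link.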

from nltk.parse.stanford import StanfordDependencyParser

path_to_jar = 'path_to/stanford-parser-full-2014-08-27/stanford-parser.jar'

path_to_models_jar = 'path_to/stanford-parser-full-2014-08-27/stanford-parser-3.4.1-models.jar'

dependency_parser = StanfordDependencyParser(path_to_jar=path_to_jar, path_to_models_jar=path_to_models_jar)

result = dependency_parser.raw_parse('I shot an elephant in my sleep')

dep = next(result)  # raw_parse returns an iterator; result.next() works only in Python 2

list(dep.triples())

Sentiment Analysis

In many cases we want to know the sentiment of a sentence, that is, whether its content is positive or negative. NLTK includes the VADER sentiment analyzer for this purpose.

from nltk.sentiment.vader import SentimentIntensityAnalyzer

sna = SentimentIntensityAnalyzer()

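If you get a warning, see the link.

Note: compound is a normalized score between -1 (most negative) and +1 (most positive). polarity_scores also returns neg, neu, and pos, the proportions of the text that are negative, neutral, and positive.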

sna.polarity_scores("The movie was great!")

sna.polarity_scores("I liked the book, especially the ending.")

sna.polarity_scores("The staff were nice, but the food was terrible.")
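The compound score is often turned into a positive / neutral / negative label. The VADER authors suggest treating compound >= 0.05 as positive, compound <= -0.05 as negative, and anything in between as neutral. The helper below (our own, hypothetical name sentiment_label) applies that convention; with the analyzer above it would be called as sentiment_label(sna.polarity_scores(text)["compound"]).

```python
def sentiment_label(compound, threshold=0.05):
    """Turn a VADER compound score into a coarse label.

    The +/-0.05 cut-off follows the convention suggested by the VADER
    authors; polarity_scores itself does not apply any threshold.
    """
    if compound >= threshold:
        return "positive"
    if compound <= -threshold:
        return "negative"
    return "neutral"

for score in (0.7, 0.0, -0.5):
    print(score, sentiment_label(score))
```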